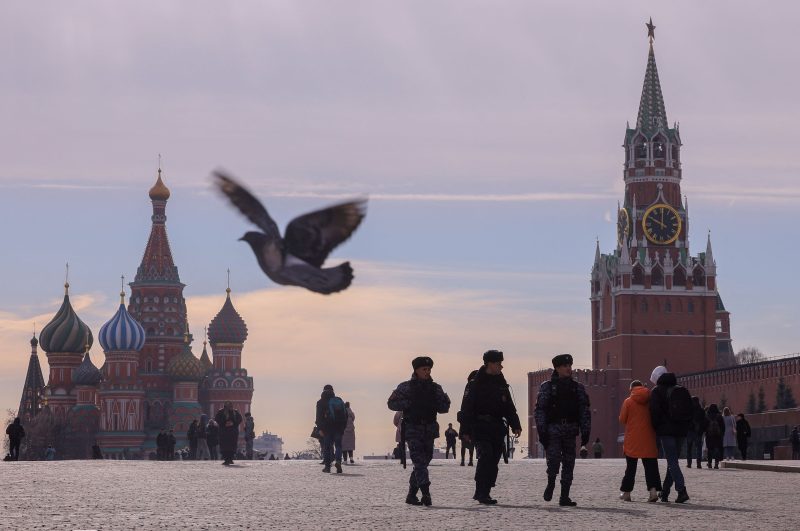

SAN FRANCISCO — The Russian government has become far more successful at manipulating social media and search engine rankings than previously known, boosting lies about Ukraine’s military and the side effects of vaccines with hundreds of thousands of fake online accounts, according to documents recently leaked on the chat app Discord.

The Russian operators of those accounts boast that they are detected by social networks only about 1 percent of the time, one document says.

That claim, described here for the first time, drew alarm from former government officials and experts inside and outside social media companies contacted for this article.

“Google and Meta and others are trying to stop this, and Russia is trying to get better. The figure that you are citing suggests that Russia is winning,” said Thomas Rid, a disinformation scholar and professor at Johns Hopkins University’s School of Advanced International Studies. He added that the 1 percent claim was probably exaggerated or misleading.

The undated analysis of Russia’s effectiveness at boosting propaganda on Twitter, YouTube, TikTok, Telegram and other social media platforms cites activity in late 2022 and was apparently presented to U.S. military leaders in recent months. It is part of a trove of documents circulated in a Discord chatroom and obtained by The Washington Post. Air National Guard technician Jack Teixeira was charged Friday with taking and transmitting the classified papers, charges that carry a sentence of up to 15 years in prison.

The revelations about Russia’s improved misinformation abilities come as Twitter owner Elon Musk and some Republicans in Congress have accused the federal government of colluding with the tech companies to suppress right-wing and independent views by painting too many accounts as Russian attempts at foreign influence. A board set up to coordinate U.S. government policy on disinformation was disbanded last year after questions were raised about its purpose and a coordinated campaign was aimed at the person who had been selected to lead it.

Twitter employees also say they worry that Musk’s cutbacks have hurt the platform’s ability to fight influence operations. Propaganda campaigns and hate speech have increased since Musk took over the site in October, according to employees and outside researchers. Russian misinformation promoters even bought Musk’s new blue-check verifications.

Many of the 10 current and former intelligence and tech safety specialists interviewed for this article cautioned that the Russian agency whose claims helped form the basis for the leaked document may have exaggerated its success rate.

But even if Russia’s fake accounts escaped detection only 90 percent of the time instead of 99 percent, that would indicate Russia has become far more proficient at disseminating its views to unknowing consumers than in 2016, when it combined bot accounts with human propagandists and hacking to try to influence the course of the U.S. presidential election, the experts said.

“If I were the U.S. government, I would be taking this seriously but calmly,” said Ciaran Martin, former head of the United Kingdom’s cyberdefense agency. “I would be talking to the major platforms and saying, ‘Let’s have a look at this together to see what credence to give these claims.’”

Martin added, “Don’t automatically equate activity with impact.”

The Defense Department declined to comment. TikTok, Twitter and Telegram, all named in the document as targets of Russian information operations, did not respond to a request for comment.

In a statement, YouTube owner Google said, “We have a strong track record detecting and taking action against botnets. We are constantly monitoring and updating our safeguards.”

With the average internet user spending more than two hours daily on social media, the internet has become perhaps the leading venue for conversations on current events, culture and politics, raising the importance of influencing what is seen and said online. But little is known about how a specific piece of content gets shown to users. The big tech companies are secretive about the algorithms that drive their sites, while marketing companies and governments use influencers and automated tools to push messages of all kinds.

The possible presence of disguised propaganda has evoked widespread concern in recent months about TikTok, whose Chinese ownership has prompted proposed bans in Congress, and Twitter, whose former trust and safety chief Yoel Roth told Congress in February that the site still harbored thousands or hundreds of thousands of Russian bots.

The document offers a rare candid assessment by U.S. intelligence of Russian disinformation operations. It indicates that it was prepared by the Joint Chiefs of Staff, U.S. Cyber Command and U.S. European Command, the organization that directs American military activities in Europe. It refers to signals intelligence, which includes eavesdropping, but it does not cite sources for its conclusions.

The document focuses on Russia’s Main Scientific Research Computing Center, also referred to as GlavNIVTs, which performs work directly for the Russian presidential administration. According to the document, the Russian network for running its disinformation campaign is known as Fabrika.

The center was working in late 2022 to improve the Fabrika network further, the analysis says, concluding that “the efforts will likely enhance Moscow’s ability to control its domestic information environment and promote pro-Russian narratives abroad.”

The analysis said Fabrika was succeeding even though Western sanctions against Russia and Russia’s own censorship of social media platforms inside the country had added difficulties.

“Bots view, ‘like,’ subscribe and repost content and manipulate view counts to move content up in search results and recommendation lists,” the summary says. It adds that in other cases, Fabrika sends content directly to ordinary and unsuspecting users after gleaning their details such as email addresses and phone numbers from databases.

The intelligence document says the Russian influence campaigns’ goals included demoralizing Ukrainians and exploiting divisions among Western allies.

After Russia’s 2016 efforts to interfere in the U.S. presidential election, social media companies stepped up their attempts to verify users, including through phone numbers. Russia responded, in at least one case, by buying SIM cards in bulk, which worked until companies spotted the pattern, employees said. The Russians have now turned to front companies that can acquire less detectable phone numbers, the document says.

A separate top-secret document from the same Discord trove summarized six specific influence campaigns that were operational or planned for later this year by a new Russian organization, the Center for Special Operations in Cyberspace. The new group is mainly targeting Ukraine’s regional allies, that document said.

Those campaigns included one designed to spread the idea that U.S. officials were hiding vaccine side effects, intended to stoke divisions in the West. Another campaign claimed that Ukraine’s Azov Brigade was acting punitively in the country’s eastern Donbas region.

Others, aimed at specific countries in the region, push the idea that Latvia, Lithuania and Poland want to send Ukrainian refugees back to fight; that Ukraine’s security service is recruiting U.N. employees to spy; and that Ukraine is using influence operations against Europe with help from NATO.

A final campaign is intended to reveal the identities of Ukraine’s information warriors — the people on the opposite side of a deepening propaganda war.

An earlier version of this story included a quote from Thomas Rid, a disinformation scholar and professor at Johns Hopkins University’s School of Advanced International Studies, without noting that he believed the 1 percent claim was likely exaggerated or misleading. That information has been added.